G.O.O.G.L.E., “Government Online Operation Gathering Legal Evidence”.

Sergey Brin and Lawrence Page: the masterminds behind a huge conglomerate that successfully developed far more than the hypertext system that DARPA and NASA helped fund.

Does Google Cache-out on our Data and Privacy?

It’s 2010. I just spent some money I earned cutting lawns on a brand new Linksys 802.11n WiFi dongle so I could piggyback off a neighbor’s open WiFi network, oblivious to the difference between HTTP and HTTPS and to how exposed traffic is on an open network. I actually thought of the “Google” acronym used in the title back in high school. “Blue screens of death” were a weekly thing for me, so I kept an .iso file and my drivers ready next to my CRT monitor, and starting fresh for the five-thousandth time didn’t bug me. What did bug me was the catch. When I first shared what I thought Google probably stood for, my friends chuckled and blew it off because I was always “thinking” too much, which is kind of who I am and what I do.

Skepticism was only sprinkled here and there throughout MySpace, forums, and chat rooms, so it wasn’t as widespread as what you see and hear today, especially if you didn’t have access to the Highland Forums. Data retrieval and retention became very obvious to me even at a young age. The “delete” button was almost my best friend, until it wasn’t.

Related: Googles Coolest Algorithms—that we Know of!

In the next decade, several new algorithmic paradigms may emerge:

Quantum AI: This paradigm harnesses the principles of quantum mechanics to enhance artificial intelligence capabilities, enabling faster processing and problem-solving through quantum superposition and entanglement. Quantum AI aims to tackle complex optimization tasks and large-scale data analysis more efficiently than classical algorithms.

Neuromorphic Computing: Drawing inspiration from the architecture and functionality of the human brain, neuromorphic computing employs spiking neural networks (SNNs) and artificial synapses to process information. This approach enhances energy efficiency and parallel processing capabilities, making it suitable for real-time applications in robotics and AI. The term was popularized by Carver Mead in the 1980s, who pioneered analog circuits that mimic biological neural systems.

Federated AI: This decentralized approach allows multiple devices to collaboratively train machine learning models while keeping data localized. By utilizing techniques such as differential privacy, federated AI enhances user privacy and reduces the need for centralized data storage, making it particularly relevant in sensitive applications like healthcare.

Evolutionary Algorithms: Inspired by natural selection, these algorithms iteratively optimize solutions by simulating processes such as mutation, crossover, and selection. They are particularly effective for solving complex, multi-objective problems where traditional optimization methods may struggle.
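To make the mutation, crossover, and selection loop described above concrete, here is a minimal sketch of a genetic algorithm in Python. It is a toy under stated assumptions, not how any production system works: the function names (`evolve`, `count_ones`), the bit-string encoding, the tournament selection, and all parameter values are hypothetical choices made for illustration.

```python
import random


def evolve(fitness, genome_length=20, population_size=30, generations=60,
           mutation_rate=0.05, seed=0):
    """Toy genetic algorithm over bit strings (illustrative assumptions only)."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(genome_length)]
                  for _ in range(population_size)]

    def tournament(k=3):
        # Selection: the fittest of k randomly chosen individuals wins.
        return max(rng.sample(population, k), key=fitness)

    best = max(population, key=fitness)
    for _ in range(generations):
        # Elitism: carry the current best forward unchanged.
        next_population = [best[:]]
        while len(next_population) < population_size:
            mother, father = tournament(), tournament()
            # Crossover: splice the two parents at a random cut point.
            cut = rng.randint(1, genome_length - 1)
            child = mother[:cut] + father[cut:]
            # Mutation: flip each bit with a small probability.
            child = [bit ^ 1 if rng.random() < mutation_rate else bit
                     for bit in child]
            next_population.append(child)
        population = next_population
        best = max(population, key=fitness)
    return best


def count_ones(genome):
    # Hypothetical fitness function: more 1-bits is better.
    return sum(genome)


best = evolve(count_ones)
print(count_ones(best), "of", len(best), "bits set")
```

Because the best individual is always copied into the next generation, the best fitness can never get worse from one generation to the next. A real multi-objective problem would need a richer encoding and fitness function than this single-score example.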

These emerging paradigms represent a significant shift from current techniques used by search engines like Google, which primarily rely on traditional machine learning models and heuristics to deliver relevant information. By integrating these advanced methodologies, future systems may achieve unprecedented levels of efficiency, adaptability, and intelligence in information retrieval and processing.
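The federated idea mentioned above (train on each device, share only model updates, add noise for privacy) can be sketched in a few lines of Python. This is a simplified illustration under assumptions: the one-parameter linear model, the helper names (`local_update`, `clip`, `federated_round`, `make_client`), and the clipping and noise values are all hypothetical, and the noise is not calibrated to any formal differential privacy guarantee.

```python
import random


def local_update(weight, data, lr=0.1, epochs=5):
    """One client's training pass on the model y = weight * x.

    The raw data stays inside this function; only the weight change is returned.
    """
    w = weight
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w - weight


def clip(delta, max_norm):
    # Bound how much any single client can move the shared model.
    return max(-max_norm, min(max_norm, delta))


def federated_round(weight, clients, max_norm=1.0, noise_std=0.01, rng=random):
    """One round: collect clipped client updates, add noise, then average."""
    deltas = [clip(local_update(weight, data), max_norm) for data in clients]
    # Gaussian noise on the aggregate, in the spirit of differential privacy.
    noisy_sum = sum(deltas) + rng.gauss(0.0, noise_std * max_norm)
    return weight + noisy_sum / len(clients)


def make_client(rng, true_w, size=20):
    # Hypothetical private dataset that lives on a single device.
    xs = [rng.uniform(-1, 1) for _ in range(size)]
    return [(x, true_w * x) for x in xs]


rng = random.Random(42)
clients = [make_client(rng, true_w=3.0) for _ in range(5)]

w = 0.0
for _ in range(100):
    w = federated_round(w, clients, rng=rng)
print("learned weight:", round(w, 2))
```

The server only ever sees one clipped number per client per round, never the `(x, y)` pairs themselves. Every client here holds the same amount of data, so a plain average is used; with uneven clients, the average would usually be weighted by dataset size.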

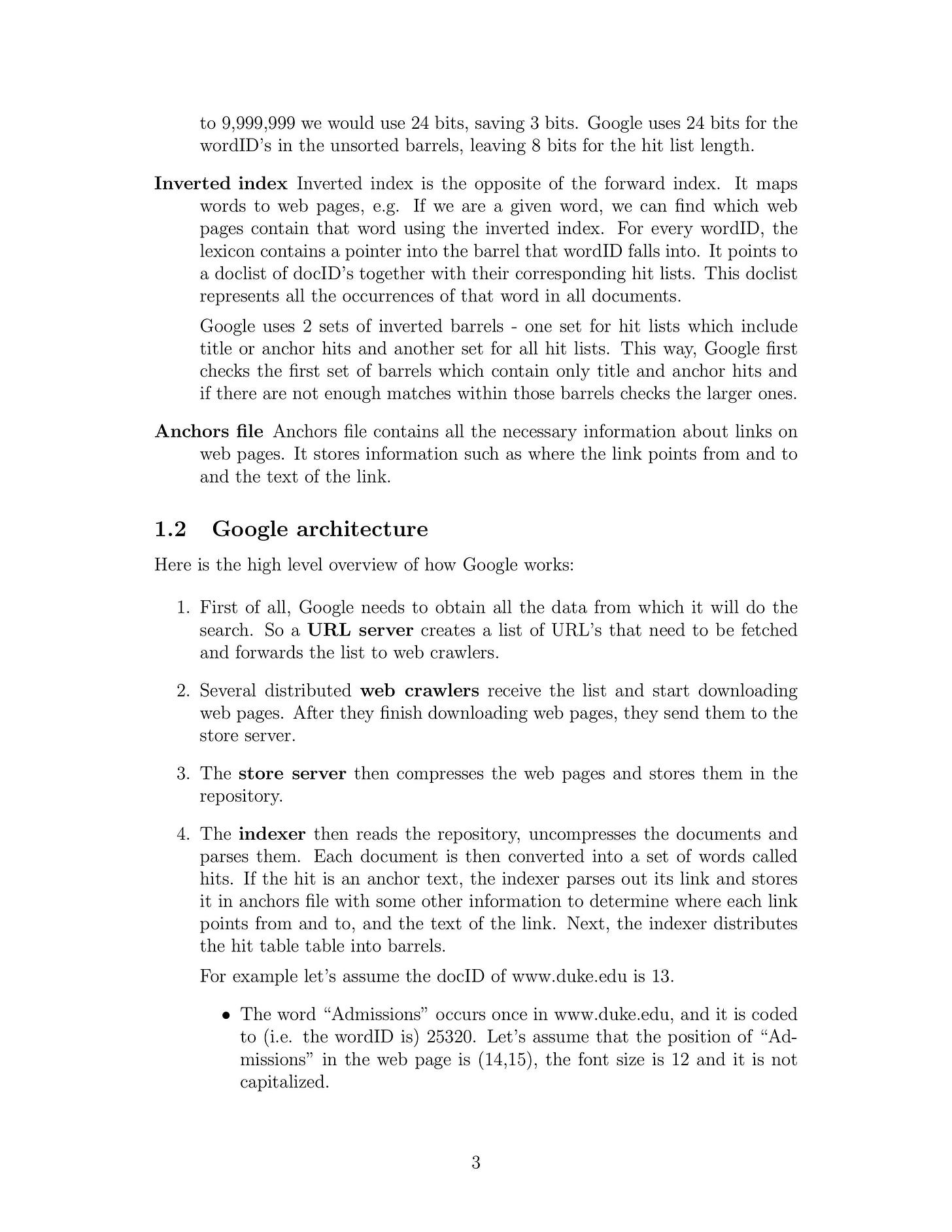

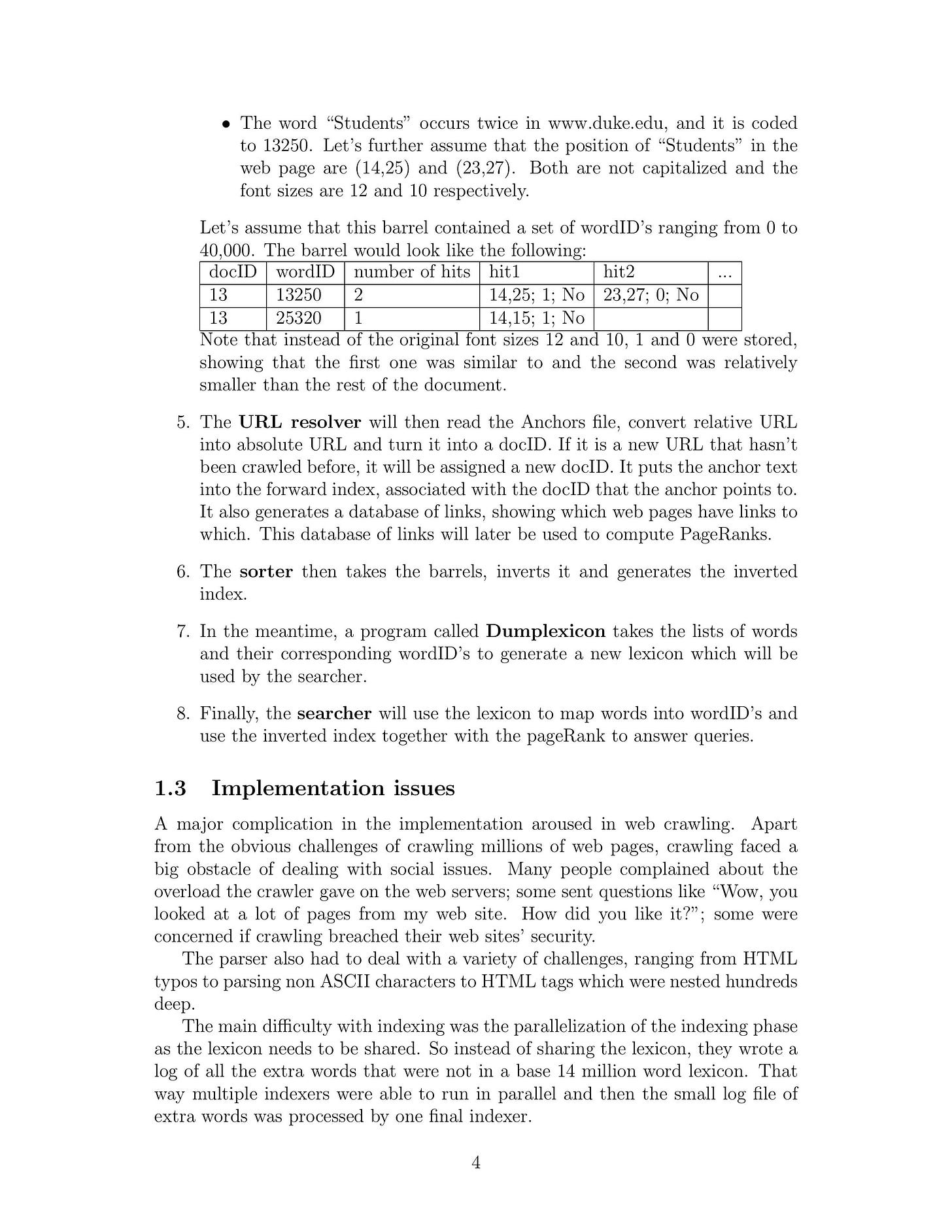

The following information is a bit outdated compared with today’s techniques for how Google and other search engines provide a user with relevant information.

The Anatomy of a Large-Scale Hypertextual Web Search Engine (Part 1)

What’s the Best Search Engine to Use These Days?

Tools built on Large Language Models (LLMs), like Perplexity and Gemini, are your best go-to for searches these days. They are often developed and funded by private entities, and they raise significant concerns because of their potential for misuse. Here are some key points:

Funding and Development

LLMs are developed by companies and research institutions, some of which receive substantial funding. For instance, OpenAI, a leading LLM developer, is one of the most heavily funded LLM developers worldwide.

Potential Misuse

The capabilities of LLMs, while powerful and transformative, also raise serious concerns about their potential misuse. IARPA, the Intelligence Advanced Research Projects Activity, has highlighted the vulnerabilities and threats associated with LLMs, including their potential for generating misinformation, eliciting sensitive information, and concealing threats to users. These models can be exploited for malicious purposes such as chemical development, malware creation, and other harmful activities.

Biases and Threats

IARPA's BENGAL program is specifically designed to understand LLM threats, quantify them, and develop novel methods to address these vulnerabilities. The program aims to detect and mitigate biases, toxic outputs, and other harmful behaviors that LLMs can exhibit. This includes characterizing vulnerabilities such as prompt injections, data leakage, and unauthorized code execution.

Related: IARPA—REASON

Security Risks

The intelligence community is particularly concerned about the safe use of LLMs, given their potential to introduce security risks. IARPA has issued requests for information to elicit frameworks for categorizing and characterizing these risks, emphasizing the need to avoid unwarranted biases and ensure the accuracy and reliability of LLM outputs.

Regulatory and Mitigation Efforts

To address these concerns, IARPA is working on technologies that can probe LLMs for nefarious code and applications, similar to how anti-virus software works. This includes developing methods to detect and characterize LLM threats and vulnerabilities, whether in "white box" or "black box" models.

So while LLMs offer immense potential for advancing various fields, their wide availability and powerful capabilities also open doors to significant risks, including malicious uses such as chemical development and malware creation. Efforts by organizations like IARPA are underway to mitigate these risks and ensure the safe and responsible use of LLM technologies.

The Anatomy of a Large-Scale Hypertextual Web Search Engine (Part 2)

What else do Google and NASA do?

NASA and Google have established significant collaborations that extend beyond the realm of Google Earth and Maps, particularly in the areas of space exploration and environmental monitoring.

One notable example is their partnership to improve the tracking of local air pollution. This collaboration, which began with an existing Space Act Agreement, has been expanded to develop advanced machine learning algorithms that combine NASA data with Google Earth Engine data. This integration aims to generate high-resolution air quality maps in near real-time, aiding local governments in their air quality management efforts.

Additionally, NASA and Google are working together to enhance the accessibility and usability of NASA's science data through the Google Cloud Platform and Google Earth Engine. This includes the incorporation of NASA data sets such as the GEOS-CF and MERRA-2 into the Earth Engine Catalogue, which are updated daily to help map and predict regions with poor air quality.

While specific details about DARPA's contracts and collaborations are not always publicly disclosed due to their sensitive nature, it is known that DARPA has been involved in various research initiatives, including those related to geoengineering and environmental monitoring. However, these are often subject to strict confidentiality and are not legally allowed to be disclosed.

Regina Dugan, who served as the 19th director of DARPA from 2009 to 2012, later transitioned to Google, highlighting the movement of talent and expertise between these organizations. This transition underscores the ongoing collaboration and exchange of knowledge between government agencies like DARPA and private tech companies like Google.

Summary

Google is actually one of my top choices for inquiries, but it really depends on what I’m searching for. I tend to use all search engines: Bing, Ecosia, Startpage, etc. Your data is being gathered by every sensor inside your device of choice and by every website or application you use, some way, somehow. This wasn’t meant to hate on Google or knock my country. Sometimes our algorithms shouldn’t know that we forgot how to spell a word or needed help with some basic math we didn’t have time to do, and then use that to determine how “sharp” we are. Privacy was a right. Once upon a time.

@DARPA, @NASA, @GOOGLE, #DATA

Recommend | Xybercraft ™